Friday, November 21, 2008

Week 10 Creative Computing - Integrated setup - Ableton Sampler

Thursday, November 20, 2008

Audio Arts Major Project

Draft Mix MP3

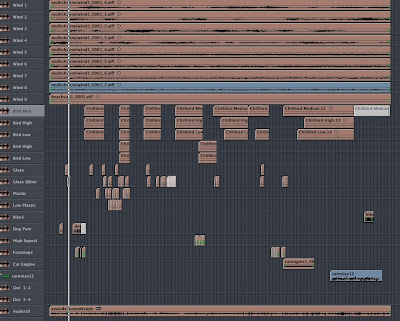

I aimed to recreate the sound of the windy seaside, staying quite faithful to the events of the recording I made. The main elements I included were wind, birds, cars, a dog, rumble, rummaging, and footsteps. Part of the objective was for the final product to have a hyper-real sheen to it, which allows for some deviation from the original recording.

In order to achieve the hyper-real texture, synthesis was my main approach to sound creation. My main synthesizer was Plogue Bidule, as its flexible modules and routing options allow for a wide variety of timbres. I found some sounds far harder than others to generate.

Wind was a main focus, because it was dominant in the original recording. I created a Bidule patch with 8 channels of noise and independent filters. The frequency of the filters was determined by independent random oscillators, and also a master control, so that the 8 channels are independent but linked to some degree. A rumble adds depth and cinematic hyper-realism. While I have tried to evoke the sea using wind and rumble sounds, I think that the real ocean does make water sounds that my simulation lacks.
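For anyone who wants to try the idea outside Bidule, here is a rough Python sketch of the wind patch. It is not the actual patch: I am assuming a one-pole low-pass filter per channel, and slow sine LFOs with random rate and phase stand in for the random oscillators. The names `wind_channels`, `master_cutoff_hz` and `spread` are made up for illustration.

```python
import math
import random

def wind_channels(num_samples, master_cutoff_hz=800.0, spread=0.5,
                  num_channels=8, sample_rate=44100, seed=0):
    """Return num_channels lists of filtered noise with linked, drifting cutoffs."""
    rng = random.Random(seed)
    channels = []
    for _ in range(num_channels):
        # Each channel gets its own slow LFO (assumed: sine with random rate and phase)
        lfo_rate = rng.uniform(0.05, 0.5)  # Hz
        lfo_phase = rng.uniform(0.0, 2 * math.pi)
        state = 0.0
        out = []
        for i in range(num_samples):
            t = i / sample_rate
            lfo = math.sin(2 * math.pi * lfo_rate * t + lfo_phase)
            # Shared master cutoff, nudged per channel by that channel's LFO
            cutoff = master_cutoff_hz * (1.0 + spread * lfo)
            # One-pole low-pass coefficient for this cutoff (assumed filter type)
            alpha = 1.0 - math.exp(-2 * math.pi * cutoff / sample_rate)
            noise = rng.uniform(-1.0, 1.0)
            state += alpha * (noise - state)
            out.append(state)
        channels.append(out)
    return channels
```

Moving `master_cutoff_hz` shifts all eight channels together, while `spread` sets how far each one wanders on its own, which is the "independent but linked" behaviour described above.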

Glass was also tackled with Bidule, using an FM synthesis patch with modulation envelopes. After generating files of randomly varied glass sounds, I compiled them in Logic to simulate the simultaneous clinking of many bottles. Many different sequences were bounced from separate Logic sessions and later used in the main session.

Percussive hitting sounds proved to be some of the most difficult to recreate. I tried to process white noise, but my results were largely corny and reminiscent of poor films. In this case, I abandoned synthesis and quickly recorded myself hitting various objects on the desk in front of me. By taking small portions of this recording, timestretching them and using EQ, reverb and enveloping, I was able to create some marginally better foley sounds. I think that these percussive sounds are the weak point of the work – particularly the footsteps.

In the final mix, heavy EQ and short reverbs proved to increase the realism of many of the sounds. Pan automation allowed elements such as cars, bikes and dogs to move around.

I’m satisfied with my final product, but I think that improvement is possible, particularly with the rummaging sounds. Through this exercise, I have realised how difficult synthesizing real sounds is.

Creative Computing Major Project

Performance Recording Mix

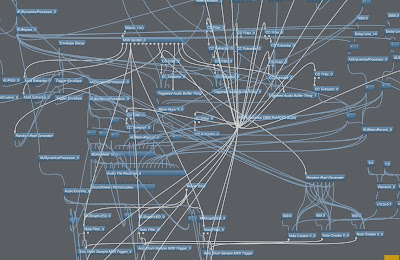

This electroacoustic performance work for trumpet and computer builds from a gentle beginning by layering sound electronically. In order to explore a live improvisation aesthetic, the performance makes use of no pre-existing audio recordings or rhythmic data. Only a basic form for the work was pre-decided, and the trumpet player largely improvised. This demonstrates that the musical outcome of electronic processing of sound relies little on the source material and more on the types of processes used. The work also explores what defines a piece of electronic performance music. The unique pre-existing aspect of this work is simply a particular processing and temporary recording array that has been configured to be interacted with in a particular way. One computer file, the corresponding software, and any acoustic instrument are the only materials needed to reproduce the work. Thus, a file replaces a traditional music score.

Every sound that is heard originated from the instrumentalist at some point during the performance. The introduction contains a prominent pulsing that results from realtime processing of the trumpet. In order for the work to progress, the role of the software operator is largely in planning ahead by recording useful excerpts, which can later be creatively manipulated. Jamie was occupied with providing interesting source material through his self-taught trumpet style.

The relationship between the electronics and the acoustic instrument is different in different parts of the work. In the gentle sections, Jamie was able to improvise with the setup and receive immediate feedback. In order to create the beat, he interacted with the equipment in a fixed, predecided way by making drum sounds. In louder sections, he was able to play over the music in a traditional manner, as if it were a separate musician.

Saturday, November 1, 2008

Week 12 Forum - Stephen steers the university battleship through oceans of amusement

Basically we discussed the course (and anything else that came up).

Wednesday, October 29, 2008

Week 9 Audio Arts - FM Synthesis

Audio Demo

Basic FM Synth

A simple FM synth based on the readings using one carrier and one modulator. The amplitude of the modulator is controlled in such a way that at 1.0, the frequency of the carrier moves between 0 and 2f (f is the note being played).

One envelope controls the overall output, while another controls the modulation depth.
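A minimal Python sketch of the same idea, assuming the modulator scales the carrier frequency as f × (1 + depth × sin), so that a depth of 1.0 swings it between 0 and 2f. The envelope shapes (simple decays) and the names `basic_fm`, `mod_hz` and `carrier_freq_range` are my own placeholders, not taken from the Bidule patch.

```python
import math

def carrier_freq_range(note_hz, depth):
    """Lowest and highest instantaneous carrier frequency for a given depth."""
    return note_hz * (1.0 - depth), note_hz * (1.0 + depth)

def basic_fm(note_hz, mod_hz, depth, duration=1.0, sample_rate=44100):
    """One carrier, one modulator, with separate output and modulation envelopes."""
    n = int(duration * sample_rate)
    phase = 0.0
    samples = []
    for i in range(n):
        t = i / sample_rate
        amp_env = 1.0 - i / n           # output envelope (assumed linear decay)
        mod_env = (1.0 - i / n) ** 2    # modulation depth envelope (assumed faster decay)
        modulator = math.sin(2 * math.pi * mod_hz * t)
        inst_freq = note_hz * (1.0 + depth * mod_env * modulator)
        # Accumulate phase so the carrier follows the changing frequency smoothly
        phase += 2 * math.pi * inst_freq / sample_rate
        samples.append(amp_env * math.sin(phase))
    return samples
```

Something like `basic_fm(440.0, 616.0, 1.0)` uses a non-integer carrier-to-modulator ratio, which tends to give inharmonic, metallic tones.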

I found that this simple FM setup was actually very effective for recreating real-world sounds, particularly metallic percussion sounds. I was so proud of my gamelan emulation (reverb helped) that I had to design some upbeat gamelan elevator music (see audio demo). I think we should install this in the new Schulz elevator when no-one's looking.

Badass FM Synth

I tried to expand the concept by using five oscillators linked in a chain, so that each oscillator modulates the next; in effect there are four modulators and one carrier. Each modulation stage has its own modulation depth envelope. You can take audio feeds from the last three oscillators in the chain and mix them in stereo.
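The chain can be sketched in Python roughly like this. It is a simplification under stated assumptions: constant depths stand in for the per-stage envelopes, the pan law is a plain linear crossfade, and `fm_chain` and `stereo_mix` are hypothetical names.

```python
import math

def fm_chain(freqs, depths, num_samples, sample_rate=44100):
    """freqs: base frequency per oscillator, first = top modulator, last = carrier.
    depths: one modulation depth per stage (len(freqs) - 1 values).
    Returns one list of samples per oscillator."""
    phases = [0.0] * len(freqs)
    outputs = [[] for _ in freqs]
    for _ in range(num_samples):
        mod = 0.0  # the first oscillator in the chain is unmodulated
        for k, base in enumerate(freqs):
            inst_freq = base * (1.0 + mod)
            phases[k] += 2 * math.pi * inst_freq / sample_rate
            out = math.sin(phases[k])
            outputs[k].append(out)
            if k < len(depths):
                mod = depths[k] * out  # this oscillator modulates the next one
    return outputs

def stereo_mix(outputs, pans):
    """Mix the last len(pans) oscillators; pan 0.0 = left, 1.0 = right (assumed linear)."""
    taps = outputs[-len(pans):]
    length = len(taps[0])
    left = [sum((1.0 - p) * tap[i] for p, tap in zip(pans, taps)) / len(pans)
            for i in range(length)]
    right = [sum(p * tap[i] for p, tap in zip(pans, taps)) / len(pans)
             for i in range(length)]
    return left, right
```

For example, `stereo_mix(fm_chain([110, 220, 330, 440, 550], [0.5] * 4, 44100), [0.0, 0.5, 1.0])` takes feeds from the last three oscillators and spreads them left, centre and right.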

It did create some complex, noisy textures, but I found it hard to use the extra oscillators to create anything that sounded particularly real-world.

Reference: Christian Haines. "Additive Synthesis." Lecture presented at the Electronic Music Unit, University of Adelaide, 14 October 2008.

Tuesday, October 28, 2008

Week 10 Audio Arts - Additive Synthesis

Can I have the award for artistic patching? Impressionism vs bidule layouts....

Audio Demo

More Extreme Additive Synth (pictured)

There are 16 oscillators, each contained in a group. The oscillators are automatically mapped as harmonics in relation to the defined fundamental, but if you want a rougher tone, they can be scattered slightly using the "Freq Freakout Factor".
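In Python terms, the frequency mapping might look something like the sketch below. How the "Freq Freakout Factor" is actually scaled in the patch is not shown here, so I am assuming it is a maximum fractional deviation per oscillator; `oscillator_frequencies` and `freakout` are illustrative names.

```python
import random

def oscillator_frequencies(fundamental_hz, num_oscillators=16, freakout=0.0, rng=None):
    """Harmonic series n * fundamental, each scattered by up to +/- freakout (a fraction)."""
    rng = rng or random.Random()
    return [
        n * fundamental_hz * (1.0 + freakout * rng.uniform(-1.0, 1.0))
        for n in range(1, num_oscillators + 1)
    ]
```

With `freakout=0.0` the oscillators sit exactly on the harmonic series; a small value such as `0.02` detunes each one by up to 2 percent.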

The amplitude of each oscillator is determined by a set ratio of the previous oscillator, creating a decreasing exponential curve. For example, if the "amp taper factor" is at 0.5, then every harmonic will be half the value of the previous one.
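The taper is just a geometric series, which a few lines of Python make concrete. The function name and the `first_amp` parameter are my own, for illustration only.

```python
def harmonic_amplitudes(num_oscillators=16, taper=0.5, first_amp=1.0):
    """Each oscillator's amplitude is the previous one multiplied by the taper factor."""
    return [first_amp * taper ** k for k in range(num_oscillators)]

# With a taper of 0.5, the first four amplitudes are 1.0, 0.5, 0.25, 0.125
print(harmonic_amplitudes(4, 0.5))
```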

The amplitude of odd and even harmonics can be boosted and cut.
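A small sketch of the odd/even control, assuming it is a simple gain applied on top of whatever amplitudes the oscillators already have (the patch may do it differently). `shape_odd_even`, `odd_gain` and `even_gain` are hypothetical names.

```python
def shape_odd_even(amplitudes, odd_gain=1.0, even_gain=1.0):
    """Scale odd and even harmonics separately; the first oscillator is harmonic 1."""
    shaped = []
    for index, amp in enumerate(amplitudes):
        harmonic = index + 1
        gain = odd_gain if harmonic % 2 == 1 else even_gain
        shaped.append(amp * gain)
    return shaped
```

Cutting the even harmonics right down leaves only odd ones, which pushes the tone towards a hollow, square-wave-like sound.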

Frequency, pan, and amplitude can be varied individually using random value generators for each oscillator, creating evolving textures.