Friday, November 21, 2008

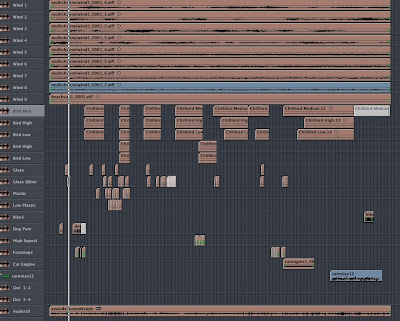

Week 10 Creative Computing - Integrated setup - Ableton Sampler

Thursday, November 20, 2008

Audio Arts Major Project

Draft Mix MP3

I aimed to recreate the sound of the windy seaside while staying quite faithful to the events of the recording I made. The main elements I included were wind, birds, cars, a dog, rumble, rummaging, and footsteps. Part of the objective was for the final product to have a hyper-real sheen to it, which allows for some deviation from the original recording.

In order to achieve the hyper-real texture, synthesis was my main approach to sound creation. My main synthesizer was Plogue Bidule, as its flexible modules and routing options allow for a wide variety of timbres. I found some sounds far harder than others to generate.

Wind was a main focus, because it was dominant in the original recording. I created a Bidule patch with 8 channels of noise and independent filters. The frequency of the filters was determined by independent random oscillators, and also a master control, so that the 8 channels are independent but linked to some degree. A rumble adds depth and cinematic hyper-realism. While I have tried to evoke the sea using wind and rumble sounds, I think that the real ocean does make water sounds that my simulation lacks.
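The "independent but linked" filter control described above can be sketched in Python. This is only a rough illustration, not the actual Bidule patch: the one-pole low-pass filter, the random-walk modulators, and all names and values (`wind_channels`, `master_hz`, `spread`) are assumptions made for the example.

```python
import math
import random

SAMPLE_RATE = 44100


def wind_channels(num_samples, channels=8, master_hz=600.0, spread=0.5, seed=0):
    """Return `channels` lists of low-pass filtered white noise.

    Each channel's cutoff is master_hz * (1 + spread * drift), where drift is
    that channel's own slow random value in [-1, 1]. The channels therefore
    wander independently but all follow the shared master control.
    """
    rng = random.Random(seed)
    out = [[0.0] * num_samples for _ in range(channels)]
    drift = [0.0] * channels  # per-channel random modulator (assumed random walk)
    state = [0.0] * channels  # memory of each one-pole low-pass filter
    for n in range(num_samples):
        for ch in range(channels):
            # A slow random walk stands in for an independent random oscillator.
            step = rng.uniform(-0.001, 0.001)
            drift[ch] = max(-1.0, min(1.0, drift[ch] + step))
            cutoff = master_hz * (1.0 + spread * drift[ch])
            # Coefficient of a one-pole low-pass filter at this cutoff.
            alpha = 1.0 - math.exp(-2.0 * math.pi * cutoff / SAMPLE_RATE)
            noise = rng.uniform(-1.0, 1.0)
            state[ch] += alpha * (noise - state[ch])
            out[ch][n] = state[ch]
    return out
```

Raising `master_hz` brightens all eight channels together, while `spread` sets how far each one may stray from the master value.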

Glass was also tackled with Bidule, using an FM synthesis patch with modulation envelopes. After generating files of randomly varied glass sounds, I compiled them in Logic to simulate the simultaneous clinking of many bottles. Many different sequences were bounced from separate Logic sessions and later used in the main session.

Percussive hitting sounds proved to be some of the most difficult to recreate. I tried to process white noise, but my results were largely corny and reminiscent of poor films. In this case, I abandoned synthesis and quickly recorded myself hitting various objects on the desk in front of me. By taking small portions of this recording, timestretching them and using EQ, reverb and enveloping, I was able to create some marginally better foley sounds. I think that these percussive sounds are the weak point of the work – particularly the footsteps.

In the final mix, heavy EQ and short reverbs proved to increase the realism of many of the sounds. Pan automation allowed elements such as cars, bikes and dogs to move around.

I’m satisfied with my final product; however, I think that improvement is possible, particularly with the rummaging sounds. Through this exercise, I have realised how difficult synthesizing real sounds is.

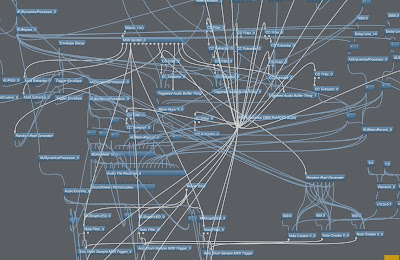

Creative Computing Major Project

Performance Recording Mix

This electroacoustic performance work for trumpet and computer builds from a gentle beginning by layering sound electronically. In order to explore a live improvisation aesthetic, the performance makes use of no pre-existing audio recordings or rhythmic data. Only a basic form for the work was pre-decided, and the trumpet player largely improvised. This demonstrates that the musical outcome of electronic processing of sound relies little on the source material and more on the types of processes used. The work also explores what defines a piece of electronic performance music. The unique pre-existing aspect of this work is simply a particular processing and temporary recording array that has been configured to be interacted with in a particular way. One computer file, the corresponding software, and any acoustic instrument are the only materials needed to reproduce the work. Thus, a file replaces a traditional music score.

Every sound that is heard originated from the instrumentalist at some point during the performance. The introduction contains a prominent pulsing that results from realtime processing of the trumpet. In order for the work to progress, the role of the software operator is largely in planning ahead by recording useful excerpts, which can later be creatively manipulated. Jamie was occupied with providing interesting source material through his self-taught trumpet style.

The relationship between the electronics and the acoustic instrument is different in different parts of the work. In the gentle sections, Jamie was able to improvise with the setup and receive immediate feedback. In order to create the beat, he interacted with the equipment in a fixed, predecided way by making drum sounds. In louder sections, he was able to play over the music in a traditional manner, as if it were a separate musician.

Saturday, November 1, 2008

Week 12 Forum - Stephen steers the university battleship through oceans of amusement

Basically we discussed the course (and anything else that came up).

Wednesday, October 29, 2008

Week 9 Audio Arts - FM Synthesis

Audio Demo

Basic FM Synth

A simple FM synth based on the readings, using one carrier and one modulator. The amplitude of the modulator is scaled so that at 1.0 the frequency of the carrier moves between 0 and 2f (where f is the frequency of the note being played).

One envelope controls the overall output, while another controls the modulation depth.
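A minimal Python sketch of this two-oscillator arrangement follows. It is not the Bidule patch itself: the exponential envelope shape, the modulator-to-carrier `ratio`, and all function and parameter names are assumptions chosen for illustration. The one detail taken from the description is the depth scaling, where a depth of 1.0 swings the carrier between 0 and 2f.

```python
import math

SAMPLE_RATE = 44100


def decay_env(t, decay_s):
    """Exponential decay envelope starting at 1.0 (assumed shape)."""
    return math.exp(-t / decay_s)


def carrier_range(note_hz, depth):
    """Lowest and highest carrier frequency reached for a given depth."""
    return (note_hz * (1.0 - depth), note_hz * (1.0 + depth))


def basic_fm(note_hz, ratio=1.4, depth=1.0, dur_s=1.0,
             amp_decay=0.4, mod_decay=0.15):
    """One carrier, one modulator, one envelope each for output and depth."""
    samples = []
    car_phase = 0.0
    mod_phase = 0.0
    mod_hz = note_hz * ratio  # hypothetical modulator tuning
    for n in range(int(dur_s * SAMPLE_RATE)):
        t = n / SAMPLE_RATE
        # The modulation depth envelope shapes how bright the attack is.
        mod = math.sin(mod_phase) * depth * decay_env(t, mod_decay)
        # The instantaneous carrier frequency stays within [0, 2f] for depth <= 1.
        inst_hz = note_hz * (1.0 + mod)
        # The output envelope shapes the overall loudness.
        samples.append(math.sin(car_phase) * decay_env(t, amp_decay))
        car_phase += 2.0 * math.pi * inst_hz / SAMPLE_RATE
        mod_phase += 2.0 * math.pi * mod_hz / SAMPLE_RATE
    return samples
```

A non-integer `ratio` with a short `mod_decay` tends towards the bell-like, metallic end of the range, which fits the percussion sounds mentioned in this post.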

I found that this simple FM setup was actually very effective for recreating real world sounds, particularly metallic percussion sounds. I was so proud of my gamelan emulation (reverb helped) that I had to design some upbeat gamelan elevator music (see audio demo). I think we should install this in the new Schulz elevator when no-one's looking.

Badass FM Synth

I tried to expand the concept by using five oscillators linked in a chain so that each oscillator modulates the next, giving four modulators and one carrier. Each modulation stage has its own modulation depth envelope. You can take audio feeds from the last three oscillators in the chain and mix them in stereo.

It did create some complex noisy textures; however, I found it hard to create anything particularly real-world using the extra oscillators.
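The chained layout can be sketched along the same lines. Again this is an illustration under assumptions rather than the original patch: the frequency ratios, the exponential depth envelopes, the linear panning, and the name `fm_chain` are all invented for the example.

```python
import math

SAMPLE_RATE = 44100


def fm_chain(note_hz, ratios, depths, decays, pans, dur_s=0.5):
    """Five oscillators in series; each one modulates the next.

    ratios: 5 frequency ratios, first entry is the top of the chain.
    depths, decays: 4 values each, one per modulation stage.
    pans: 3 values in [0, 1] for the last three oscillators (0 = left).
    Returns a list of (left, right) frames.
    """
    phases = [0.0] * 5
    frames = []
    for n in range(int(dur_s * SAMPLE_RATE)):
        t = n / SAMPLE_RATE
        outs = []
        mod = 0.0  # the first oscillator is unmodulated
        for i in range(5):
            outs.append(math.sin(phases[i]))
            hz = note_hz * ratios[i] * (1.0 + mod)
            phases[i] += 2.0 * math.pi * hz / SAMPLE_RATE
            if i < 4:
                # Each stage has its own depth envelope (assumed exponential).
                mod = outs[i] * depths[i] * math.exp(-t / decays[i])
        # Audio is tapped from the last three oscillators and mixed in stereo.
        left = sum(outs[2 + k] * (1.0 - pans[k]) for k in range(3)) / 3.0
        right = sum(outs[2 + k] * pans[k] for k in range(3)) / 3.0
        frames.append((left, right))
    return frames
```

For example, `fm_chain(220.0, [1, 2, 3, 1.5, 1], [0.5] * 4, [0.2] * 4, [0.0, 0.5, 1.0])` spreads the three taps across the stereo field.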

Reference: Christian Haines. "Additive Synthesis." Lecture presented at the Electronic Music Unit, University of Adelaide, 14 October 2008.

Tuesday, October 28, 2008

Week 10 Audio Arts - Additive Synthesis

Can I have the award for artistic patching? Impressionism vs bidule layouts....

Audio Demo

More Extreme Additive Synth (pictured)

There are 16 oscillators, each contained in a group. The oscillators are automatically mapped as harmonics in relation to the defined fundamental; however, if you want a rougher tone, they can be scattered slightly using the "Freq Freakout Factor".

The amplitude of each oscillator is determined by a set ratio of the previous oscillator, creating a decreasing exponential curve. For example, if the "amp taper factor" is at 0.5, then every harmonic will have half the amplitude of the previous one.

The amplitude of odd and even harmonics can be boosted and cut.

Frequency, pan, and amplitude can be varied individually using random value generators for each oscillator, creating evolving textures.
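The harmonic mapping, amplitude taper, and odd/even balance can be sketched in a few lines of Python. The function and parameter names here (`partials`, `taper`, `scatter`, and so on) are hypothetical stand-ins for the patch controls, and the random per-oscillator pan and amplitude variation is left out to keep the example short.

```python
import math
import random


def partials(fundamental_hz, count=16, taper=0.5, odd_gain=1.0,
             even_gain=1.0, scatter=0.0, seed=0):
    """Return a list of (frequency, amplitude) pairs, one per oscillator."""
    rng = random.Random(seed)
    result = []
    amp = 1.0
    for k in range(1, count + 1):
        freq = fundamental_hz * k
        # A "freakout"-style scatter detunes each harmonic by a random fraction.
        freq *= 1.0 + scatter * rng.uniform(-1.0, 1.0)
        gain = odd_gain if k % 2 else even_gain
        result.append((freq, amp * gain))
        amp *= taper  # each harmonic is a fixed ratio of the previous one
    return result


def render(parts, num_samples, sample_rate=44100):
    """Sum the sine partials, normalised by the total amplitude."""
    total = sum(a for _, a in parts) or 1.0
    return [
        sum(a * math.sin(2.0 * math.pi * f * n / sample_rate) for f, a in parts) / total
        for n in range(num_samples)
    ]
```

With `taper=0.5` the amplitudes run 1, 0.5, 0.25, 0.125 and so on, which is the decreasing exponential curve described above.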

Saturday, October 25, 2008

Week 8 Audio Arts - Amplitude/Ring Modulation

Week 9 Creative Computing - Integrated Setup

Thursday, October 16, 2008

Week 10 Music Tech Forum - Honours Presentations

Presentations from the honours students!

Wednesday, October 15, 2008

Week 9 Forum - 3rd Year Presentations!

Wednesday, September 24, 2008

Week 8 Forum - Eraserhead

Friday, September 12, 2008

Week 7 Audio Arts - Analog Synthesizers!

Above is a Roland SH-5 which I attempted to create some organic sounds with. It feels great to be playing with something that isn't a computer. The many controls allow easy realtime control to manually add that modulation you need without bothering to assign things to an LFO or modwheel. I used the pitch lever often to control pitch or filter in order to move it exactly as I needed.

Week 8 Creative Computing - Ableton Live 2 - Let's Do the Time Warp Again Please

The Audio File

Week 7 Creative Computing

Thursday, September 11, 2008

Week 7 Forum - Second Year Presentations

Week 6 Creative Computing - More Logic Skills

Week 6 Audio Arts - Interaction Design - Age of Mythology

The menu includes options related to which ancient God you choose to base your civilization around, what kind of enemy you will face, and the layout of the map that the game is set in. Actual gameplay involves controlling the numerous buildings and personnel of your empire. The game is created by Ensemble Studios and marketed primarily to children.

Thursday, September 4, 2008

Week 6 Forum - AUDIO RASA CHARADE FUN GAME PERSIAN BABY

In today's exercise we attempted to recognize sonically represented emotions. Some radically different approaches were used in portraying the "rasa" such as:

- Sampling different styles of commercial music

- Downloading the pure, instinctive cries of yet-to-be-conditioned babies from YouTube

- Traditional western art music conventions

- Moody synthesized textures

- Drawing on the cliches of mainstream cinema

- Speaking about emotional subjects in a foreign language

Sunday, August 31, 2008

Week 5 Audio Arts - Sound Art of Laurie Anderson

Saturday, August 30, 2008

Week 5 Forum - Negativland

Week 5 Creative Computing - Isolation (MIDI Reggae Version)

Isolation MIDI Reggae Version MP3

Tuesday, August 26, 2008

Week 4 Creative Computing - Logic!

NIN Ripoff Attempt MP3

Monday, August 25, 2008

Week 4 Audio Arts - Analysing Sound for Ads

Reference: Christian Haines. "Week 4 Audio Arts - Advertisement Sound Design Analysis." Lecture presented at the Electronic Music Unit, University of Adelaide, 17 August 2008.

Saturday, August 23, 2008

Week 4 Forum - Indian Classical Music

Tuesday, August 19, 2008

Week 3 Forum - First Year Presentations

Cool to see what everyone's been up to!

Monday, August 18, 2008

Week 3 Creative Computing - Modding the Spectral Freeze Crossfader

It was already quite complete with a fair few modulation options so I haven't changed much.

- Check the box to sync the filter frequency to the pitch of the note so you can use it to emphasize certain harmonics or the fundamental and have the filter pick the right range for any note.

- You can modulate the pitch of the original oscillator with another high-frequency oscillator by a variable amount.

- The frequency of the modulator is locked to the carrier and is defined by its ratio to the carrier.

- The original oscillator can now be sent to the outputs bypassing the spectral section and a mix can be created between the original FM section and the freezer section which is also fed from the FM section.

- You can make notes begin with the emphasis on the FM section and then fade towards the more atmospheric spectral section over a variable time.

- Recorded the audio demo with some FX.

- One of them is this stereo modulated delay thing.

- Basically the delay time is modulated by an oscillator, and the oscillator is in opposite phase in the two channels.
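The stereo modulated delay from the last few points can be sketched as follows. This is an illustrative reconstruction, not the effect actually used: the sine LFO, the linear interpolation, the dry/wet `mix`, and all default values are assumptions.

```python
import math


def stereo_mod_delay(mono, sample_rate=44100, base_ms=20.0, depth_ms=5.0,
                     lfo_hz=0.5, mix=0.5):
    """Delay a mono signal into two channels with opposite-phase modulation."""
    left, right = [], []
    for n, dry in enumerate(mono):
        lfo = math.sin(2.0 * math.pi * lfo_hz * n / sample_rate)
        frame = []
        # The same LFO is applied with opposite sign to each channel.
        for sign in (1.0, -1.0):
            delay = (base_ms + sign * depth_ms * lfo) * sample_rate / 1000.0
            pos = n - delay  # fractional read position in the past
            if pos < 0:
                wet = 0.0  # nothing has been played that far back yet
            else:
                i = int(pos)
                frac = pos - i
                nxt = mono[min(i + 1, len(mono) - 1)]
                # Linear interpolation between neighbouring samples.
                wet = mono[i] * (1.0 - frac) + nxt * frac
            frame.append((1.0 - mix) * dry + mix * wet)
        left.append(frame[0])
        right.append(frame[1])
    return left, right
```

Because one channel's delay shortens while the other's lengthens, the two sides drift slightly apart in pitch and timing, which widens the stereo image.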

Sunday, August 17, 2008

Week 3 Audio Arts - Deja Vu Sound Design Analysis

Sunday, August 10, 2008

Week 2 Forum - Some of David Harris's favourite things.

The first item was David's latest composition "Terra Rapta", which was written for the Grainger String Quartet. I really enjoyed it, particularly the disjointedness of the rhythms. Perhaps it really helps having such great musicians interpreting the music, because they add an extra layer of musicality to the aggressive writing. I find Stephen's view on program notes interesting. I think that they can particularly narrow your mind for instrumental music, where there is no text and your mind is free to wander. But I understand that this piece was written with a particular theme in mind, and so David was keen to express this.

Week 2 Creative Computing - FFT Freeze Crossfader Synth

Sunday, August 3, 2008

Week 2 Audio Arts - Soundscape Analysis

I attempted to record the interesting soundscape of the train; however, my phone recording turned out to be unusable because few sounds could be distinguished. I then decided to settle for a room soundscape, which contained various interesting elements including a TV. I tried to stir up the cats because I hear most people are indifferent to cats and so they won't be offended.

Week 1 Music Tech Forum - Listening Culture

Week 1 Audio Arts - Windows 95 Startup Sound

Friday, August 1, 2008

Week 1 Creative Computing - Bidule experimentation

- Separate stochastic note list for each drum

- Different settings for each drum

- Used a transposer to change note from A to desired note

- Various synths fed by a sequencer or stochastics

- Some sounds were created from FFT data created by analysing audio from a synth controlled by both stochastic note list and live MIDI input

- FFT data resynthesized after processing

- FFT data is converted to MIDI and triggers synths (claw and waveshaped drum)

- The MIDI data is then looped back to the synth that feeds the FFT analysis to create an interesting data loop.

- During the audio example performance I played some MIDI data live.

Thursday, June 26, 2008

Creative Computing Project - I Say Concrete Without a French Accent?

Part 1

This section follows the journey of a food preparer using traditional music concrete techniques. The microwave’s whirring soothes the user into a relaxed state, then they are roused by the chaotic beeping of several timers and the boiling of the kettle. Processing includes reverb, delay, flanger, varispeed and compression. The style of the result is inspired by pioneering concrète artists such as Pierre Henry and Pierre Schaeffer.

Part 2

Sounds of a microwave, kettle, beaters, glass jar and bowl are intensely processed using unpredictable software such as Soundhack and Fscape and sequenced in Pro Tools and Reason. This part draws inspiration from modern electronic artists from the “IDM” subgenre.

Part 3

A return to the style and techniques of classic 50s concrete as in part 1. The dishes are washed; however, one cannot resist making music with the sounds of the glasses and cutlery while undertaking this task. The main sounds used are running water, a gas stove being lit, the ringing of a struck knife and the sound of a glass being played percussively.

Wednesday, June 25, 2008

AA Recording Project - The Notorious Daughters 'Rain'

Sunday, June 8, 2008

Week 12 Forum - Scratch Tutorials, Windowlicker

Saturday, June 7, 2008

Week 11 Forum - Philosophy of Music/Environmental Discussion

- Stephen Whittington. "Week 11 Music Technology Forum - Philosophy of Music". Lecture presented at the Electronic Music Unit, University of Adelaide, 29 May 2008.

- David Harris. "Week 11 Music Technology Forum - Philosophy of Music". Lecture presented at the Electronic Music Unit, University of Adelaide, 29 May 2008.

Tuesday, May 27, 2008

Week 10 Creative Computing - Meta Synth

Sunday, May 25, 2008

Week 10 Audio Arts - Acoustic Guitar

Friday, May 23, 2008

Week 10 Music Technology Forum - Turntablism

Monday, May 19, 2008

Week 9 Creative Computing - SoundHack/FScape/NN19 GlitchFest

Sunday, May 18, 2008

Week 9 Audio Arts - The Viscous Groove of Ska

- 2 U87s in omni, spaced pair low over the kit

- Beta52 inside kick 1 inch from beater area

- Beta52 outside kick 30cm from resonant head

- SM57 on top snare angled inwards

- Beta57 on bottom snare 2 inches from snare

- Beta56 on hi-tom

- MD421 on mid-tom

- MD421 on floor-tom

- NT5 2 inches from top of hi-hats

- C414 behind a baffle in the room

- Avalon DI - Bass

- MD421 perpendicular to guitar amp

- SM57 facing inwards - guitar amp

- C414 facing at guitar amp

- Cut boxy lower mids, boosted bass, upper mid slap area

- Positioned the kick higher than the bass

- Gated for less mud

- Compressed with long attack for slap

- Cut boxy mid area

- Boosted cutting treble

- Gated for less mud

- Compressed with moderate attack

- Boosted higher frequency slap area

- Cut excessive bass on some toms

- Manually gated via editing

- Cut some bass, lower mids to decrease mud

- Compressed slightly

- Compressed for pumping effect

- Blended together

- Cut chirpy upper mids

- Boosted treble, lower mids

- Cut mids

- Compressed with slow attack

- Cut lower mids, compressed slightly

Thursday, May 15, 2008

Week 9 Music Tech Forum

Tuesday, May 13, 2008

Week 8 Creative Computing - Sampling

Friday, May 9, 2008

Week 8 Audio Arts - Drum Recording

- Soft, polished sound

- Wide stereo spread

- Separated elements

- Reasonably natural

- Room mic adds depth and body to snare

- The kick does not have very much definition in slap, maybe too much air movement

- Imaging of overheads perhaps a little unstable. Maybe too much separation of mics

- Probably better for most modern, commercial pop/rock

- Low-fi but present tone

- Kick sounds huge and natural and snare cuts nicely, though toms are not very loud or defined.

- Kit elements less separated due to further-away mics and no stereo panning

- Kick and snare can be mixed quite loud while keeping the kit homogenous. Important elements get priority.

- Mono makes drums more compact so they could fit nicely into a mix without dominating

- Nice natural ambience from omni overhead

- Maybe useful for more vintage production styles or to sample in electronic pieces

Thursday, May 8, 2008

Week 8 Forum - Peter Dowdall - Audio Engineering, Session Management

Wednesday, May 7, 2008

Week 7 Creative Computing - Sample Library

- Filtered a clarinet note and cut out a section

- Timestretched it and pitch shifted for a chord

- Automated a sweeping low pass filter and a peak band (for resonance). Two EQ plugins used for heavy boost

- Pitched-down heavily filtered saw wave underneath

- Clarinet portion of above reversed

- Sections of the clarinet case

- Slowed down, lowered in pitch, 2 tracks

- Reverbed

- Automated peak EQ frequency by dragging knob insanely

- Backwards reverb trail created from snippet, left channel pitchshifted up a semitone

- Many layers of pitch shifted saw wave sample with many layers of EQ and volume envelope

- Short toned portion of clarinet case drop (the metal mouthpiece cover rang) timestretched and pitch shifted, placed on multiple panned tracks

- Backwards reverb

- Heaps of tracks with systematically varied panning, EQ peaks and delay time

- Clarinet note and clarinet case excerpts were placed on descending tracks

- Buzzy sound created by clarinet with very short delay

- Clarinet case section with long delay and reverb

- Clarinet, its case and Pro Tools operator playing in a trio

- Beat from clarinet case with pitch shift, EQ, fades etc.

- AmpliTube, delay, fades on clarinet

- Edited, reversed, timeshifted clarinet case